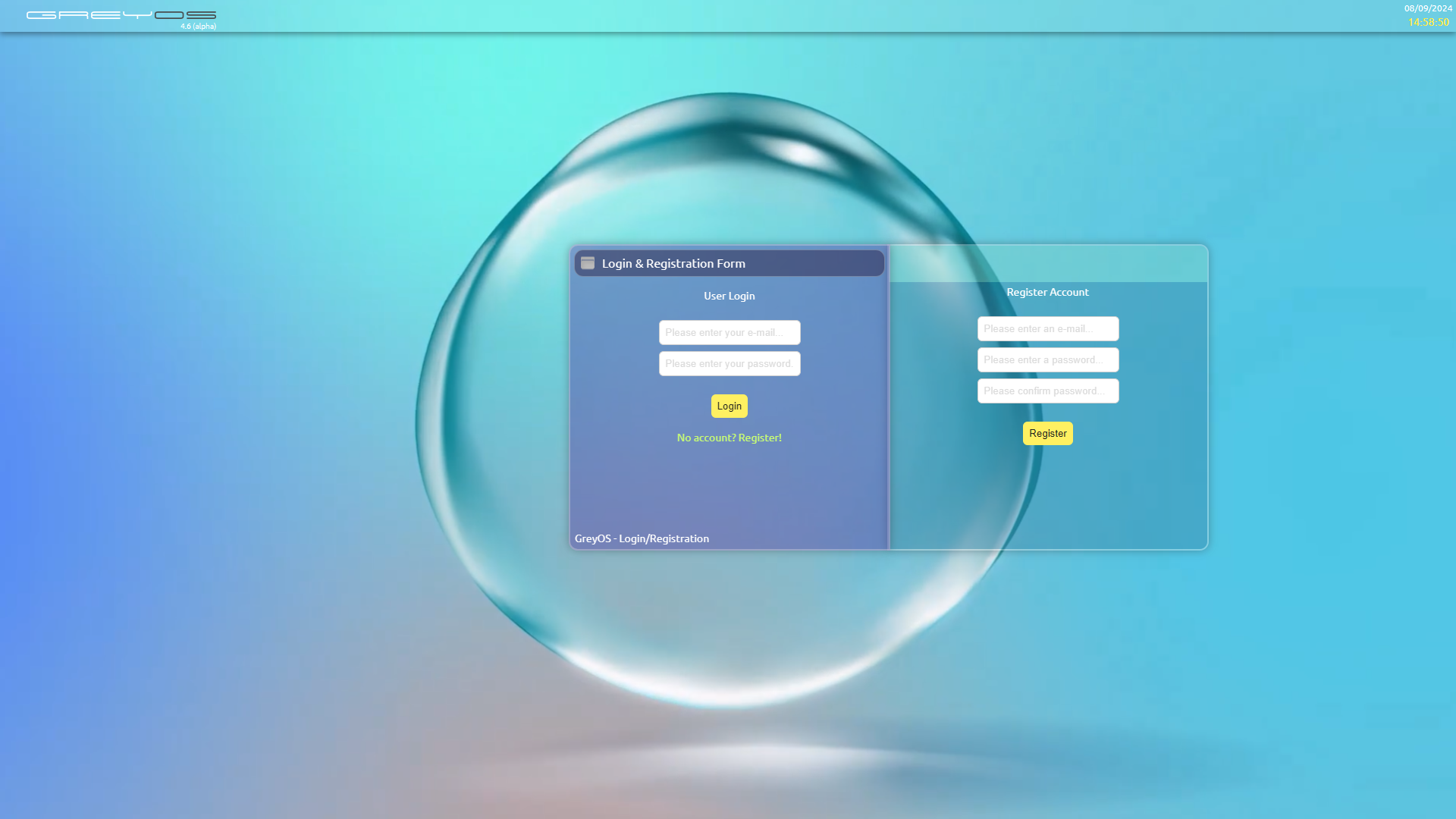

Moltbot: The Viral 'Claude with Hands' (Formerly Clawdbot)

Meet Moltbot, the self-hosted AI agent that has gone viral. Learn why it was rebranded from Clawdbot, how it integrates with your favorite apps, and how to get it running locally.